Eagle-Lanner tech blog

- Article details

- Author: Super User

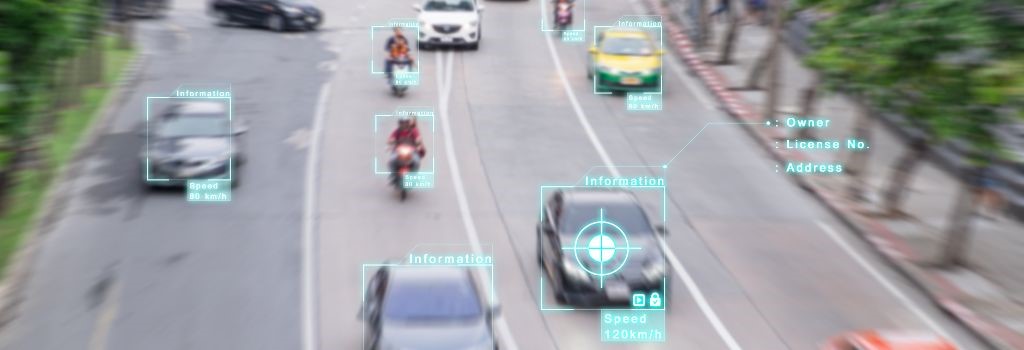

As artificial intelligence continues to advance, combining visual data analysis with natural language processing has become a transformative innovation for enterprises. One solution in this domain is image-to-text generative AI technology, which combines advanced object detectors with extensive training on visual and language datasets, allowing the model to break down images and video frames into individual components such as objects, people, and locations. The model generates detailed descriptions that can be queried using natural language prompts or retrieved automatically through an API, and this interaction between image analysis and language understanding sets the technology apart in the realm of computer vision.

Read more: Deploying Image-to-Text Generative AI at the Network Edge

The evolution of edge computing has led to the creation of highly efficient and powerful solutions, necessary for processing data closer to its source. One standout solution in this landscape is the Converged Multi-access Edge Computing (MEC) Server, often described as a "Data Center in a box." This scalable, all-encompassing system integrates multiple functionalities into a single unit by using software-defined architecture, distinguishing itself from traditional dedicated architectures.

Read more: Consolidating Edge Workloads with Converged MEC Servers

The integration of AI and edge computing has unlocked numerous possibilities across various industries. One particularly transformative application is the deployment of Large Language Models (LLMs) on private 5G networks. This combination promises not only enhanced data security and privacy but also improved efficiency and responsiveness. In this blog post, we explore how to build a secure LLM infrastructure using an Edge AI Server on a private 5G network.

Read more: Building a Secure, private Large Language Models (LLM) on Private 5G Network

To meet the evolving demands of high-performance computing (HPC) and AI-driven data centers, NVIDIA has introduced the MGX platform. This innovative modular server technology is specifically designed to address the complex needs of modern computing environments, offering scalable solutions that can adapt to the varying operational requirements of diverse industries. With its future-proof architecture, the NVIDIA MGX platform enables system manufacturers to deliver customized server configurations that optimize power, cooling, and budget efficiencies, ensuring that organizations are well-equipped to handle the challenges of next-generation computing tasks.

Read more: Empowering the Edge AI with the NVIDIA MGX Platform

In the dynamic realm of Edge AI appliances, security, efficiency, performance, and scalability stand as critical pillars. Intel® Xeon® 6, code-named Sierra Forest, rises to meet these needs with state-of-the-art technology. As the inaugural processors to harness the new Intel 3 process node, the Xeon® 6 processors offer substantial power and performance enhancements. Optimized for diverse applications, including web and scale-out containerized microservices, networking, content delivery networks, cloud services, and AI workloads, Sierra Forest delivers consistent, reliable performance and superior energy efficiency, along with maximized rack and core density for comprehensive workload management.

Read more: The Edge of Innovation: Intel® Xeon® 6 Drives Next-Generation Network AI Performance

In the era of high-speed connectivity and digital transformation, the convergence of Artificial Intelligence (AI) and 5G technology has revolutionized industries and redefined user experiences. By deploying AI algorithms at the network edge, organizations can minimize latency, reduce bandwidth consumption, and optimize resource utilization, all of which are critical factors for maximizing the benefits of 5G technology.

In today’s enterprise networks, the proliferation of cyber threats poses significant challenges to organizations worldwide. Artificial Intelligence (AI) is emerging as a game-changer, revolutionizing how we approach network security. Let’s delve into why AI in cybersecurity is not just advantageous but essential for safeguarding against evolving threats.

Read more: Leveraging AI in Network Security: Enhancing Protection and Efficiency